The brain of a vertebrate is the most complex organ of its body. In a typical human, the cerebral cortex (the largest part) is estimated to contain 15 to 33 billion neurons, each connected by synapses to several thousand other neurons. These neurons communicate with one another through long protoplasmic fibers called axons, which carry trains of signal pulses called action potentials to distant parts of the brain or body, targeting specific recipient cells. Much of what we know about the brain comes from direct observation, such as MRI scans and post-mortem examination.

In many philosophies, the conscious mind is considered to be a separate entity, existing in a parallel realm not described by physical law. Some people claim that this idea gains support from the description of the physical world provided by quantum mechanics. A recent theory of cognitive computation tries to merge silicon-based computer models with human-like cognitive thinking, under a paradigm called the memory-prediction framework.

The memory-prediction framework is a theory of brain function that was created by Jeff Hawkins and described in his 2004 book On Intelligence. This theory concerns the role of the mammalian neocortex and its associations with the hippocampus and the thalamus in matching sensory inputs to stored memory patterns and how this process leads to predictions of what will happen in the future.

The memory-prediction framework provides a unified basis for thinking about the adaptive control of complex behavior. Although certain brain structures are identified as participants in the core 'algorithm' of prediction-from-memory, these details are less important than the set of principles proposed as the basis for all high-level cognitive processing.

The central concept of the memory-prediction framework is that bottom-up inputs are matched in a hierarchy of recognition, and evoke a series of top-down expectations encoded as potentiations. These expectations interact with the bottom-up signals to both analyse those inputs and generate predictions of subsequent expected inputs. Each hierarchy level remembers frequently observed temporal sequences of input patterns and generates labels or 'names' for these sequences.

When an input sequence matches a memorized sequence at a given layer of the hierarchy, a label or 'name' is propagated up the hierarchy, thus eliminating details at higher levels and enabling them to learn higher-order sequences. This process produces increased invariance at higher levels. Higher levels predict future input by matching partial sequences and projecting their expectations to the lower levels. However, when a mismatch between input and memorized/predicted sequences occurs, a more complete representation propagates upwards. This causes alternative 'interpretations' to be activated at higher levels, which in turn generates other predictions at lower levels.
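
To make this bottom-up/top-down loop more concrete, here is a toy two-level sketch in Python. It is not Hawkins' algorithm or any published implementation: the class name `Level`, the fixed sequence length, the count threshold, the non-overlapping chunking and the label format are all hypothetical simplifications. Each level memorizes frequent sequences and names them; a recognized sequence is passed up as its label, a mismatch passes the raw detail up, and a higher level can complete a partial sequence and hand that expectation back down.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Level:
    """One level of a toy memory-prediction hierarchy (hypothetical design)."""
    name: str
    seq_len: int = 2     # length of the sequences this level memorizes
    min_count: int = 2   # how often a sequence must occur to earn a label
    counts: Counter = field(default_factory=Counter)
    labels: dict = field(default_factory=dict)

    def learn(self, stream):
        # Count every window of seq_len inputs; frequent windows get a "name".
        for i in range(len(stream) - self.seq_len + 1):
            self.counts[tuple(stream[i:i + self.seq_len])] += 1
        for seq, n in self.counts.items():
            if n >= self.min_count and seq not in self.labels:
                self.labels[seq] = f"{self.name}{len(self.labels)}"

    def recognize(self, stream):
        # Bottom-up pass over non-overlapping chunks: a known chunk becomes its
        # label, an unknown chunk is passed upward in full (a mismatch).
        out, mismatches = [], 0
        for i in range(0, len(stream), self.seq_len):
            chunk = tuple(stream[i:i + self.seq_len])
            if chunk in self.labels:
                out.append(self.labels[chunk])
            else:
                out.extend(chunk)
                mismatches += 1
        return out, mismatches

    def predict_next(self, prefix):
        # Complete a partial sequence with the most frequent memorized match.
        k = len(prefix)
        if not 0 < k < self.seq_len:
            raise ValueError("prefix must be shorter than seq_len")
        matches = [(n, seq) for seq, n in self.counts.items()
                   if seq in self.labels and seq[:k] == tuple(prefix)]
        return max(matches)[1][k] if matches else None

    def expand(self, label):
        # Top-down: unpack a label into the sequence it names.
        for seq, lab in self.labels.items():
            if lab == label:
                return list(seq)
        return [label]


# Train a two-level hierarchy on a repeating input stream.
train = list("abcdabcdabcd")
low, high = Level("A"), Level("B")
low.learn(train)
high.learn(low.recognize(train)[0])


def run(stream):
    """Pass a stream up both levels; return top output and mismatch counts."""
    mid, m_low = low.recognize(stream)
    top, m_high = high.recognize(mid)
    return top, m_low, m_high
```

With this training, `run(list("abcdabcd"))` collapses to two identical top-level labels, while `run(list("abcdxy"))` lets the unfamiliar `x`, `y` reach the top level unchanged. `low.expand(high.predict_next(["A0"]))` turns the upper level's expectation back into the lower-level inputs `c`, `d`.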

In other words, a brain recognizes a pattern from its senses and remembers it for future events, until new patterns create additional memories that allow it to respond appropriately to whatever it perceives. Unlike this new model of computation, however, the human brain has ethics and personal tastes, and the ability to back out of something based on hunches and personal comfort.

Traditionally, a computer does its work mostly sequentially and is driven by a clock. The clock acts like the conductor of an orchestra, driving every instruction and piece of data to its next location. As clock rates increase to move data faster, power consumption rises dramatically, and even at rest these machines draw a lot of electricity. More importantly, computers have to be programmed: they are hard-wired and fault-prone, and they are good at executing defined algorithms and performing analytics. With $41 million in funding from the Defense Advanced Research Projects Agency (DARPA), scientists at IBM's Almaden lab set out to build a brain in a project called Systems of Neuromorphic Adaptive Plastic Scalable Electronics (SyNAPSE).
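
The link between clock rate and power can be made concrete with the standard first-order model of dynamic (switching) power in CMOS logic. It is offered here as textbook background, not as a figure for any particular machine:

$$
P_{\text{dyn}} = \alpha \, C \, V_{dd}^{2} \, f
$$

Here $\alpha$ is the activity factor (the fraction of gates switching per cycle), $C$ the switched capacitance, $V_{dd}$ the supply voltage and $f$ the clock frequency. Raising $f$ usually also requires raising $V_{dd}$, which enters squared, so power grows faster than linearly with clock rate. Leakage current adds a static component that is drawn even when the machine is idle.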

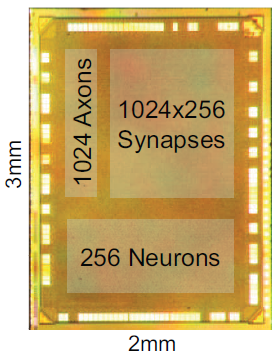

IBM's "neurosynaptic computing chip" features a silicon core capable of digitally replicating the brain's neurons, synapses and axons. To achieve this, researchers took a dramatic departure from the conventional von Neumann computer architecture, which links internal memory and a processor with a single data channel. This structure allows data to be transmitted at high but limited rates, and it isn't especially power efficient, particularly for more sophisticated, scaled-up systems. Instead, IBM integrated memory directly within its processors, wedding hardware with software in a design that more closely resembles the brain's cognitive structure. This severely limits data transfer speeds, but allows the system to execute multiple processes in parallel (much like humans do) while minimizing power usage. IBM's two prototypes have already demonstrated the ability to navigate, recognize patterns and classify objects, though the long-term goal is to create a smaller, low-power chip that can learn by analyzing more complex data.
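
The idea of keeping memory next to computation can be sketched with a toy core in Python. This is a simplified illustration loosely modelled on the description above, not IBM's actual design: the class `ToyCore`, the binary synapse crossbar, the single shared weight, the threshold and the leak are hypothetical choices. Each core stores its own synapse connections and neuron potentials, and does work only for axons that actually carry a spike, so many such cores could in principle run side by side without a shared memory bus.

```python
class ToyCore:
    """Toy neurosynaptic core (hypothetical parameters, not IBM's design).

    The synapse crossbar and the neurons' membrane potentials are stored
    inside the core, next to the neurons that use them.
    """

    def __init__(self, crossbar, weight=1, threshold=3, leak=1):
        # crossbar[a][n] is True when input axon a has a synapse on neuron n.
        self.crossbar = crossbar
        self.weight = weight
        self.threshold = threshold
        self.leak = leak
        self.potential = [0] * len(crossbar[0])

    def tick(self, active_axons):
        """Advance one time step; return the indices of neurons that fired."""
        # Integrate: only axons that spiked this step are visited.
        for a in active_axons:
            for n, connected in enumerate(self.crossbar[a]):
                if connected:
                    self.potential[n] += self.weight
        fired = []
        for n, v in enumerate(self.potential):
            # Leak toward zero, then fire and reset once the threshold is hit.
            v = max(0, v - self.leak)
            if v >= self.threshold:
                fired.append(n)
                v = 0
            self.potential[n] = v
        return fired


if __name__ == "__main__":
    core = ToyCore([[True, False], [True, True], [False, True]],
                   weight=2, threshold=3, leak=1)
    for spikes in ({0, 1}, set(), {1, 2}):
        print(sorted(spikes), "->", core.tick(spikes))
```

The indices of fired neurons could be routed as spikes onto the axons of other cores; that routing layer is left out of this sketch.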

The IBM project was led by Dharmendra S. Modha with researchers from five universities and the Lawrence Berkeley National Laboratory. Dubbed “Blue Matter,” a software platform for neuroscience modeling, it pulls together archived magnetic resonance imaging (MRI) scan data and assembles it on a Blue Gene/P supercomputer. By May 2009, IBM had essentially simulated a brain with 1 billion neurons and 10 trillion synapses, which it claims is roughly equivalent to a cat’s cortex, or 4.5% of a human brain.

More recently, IBM says it has passed the 100 trillion synapse mark with its new “TrueNorth” system, running on what was then the world’s fastest supercomputer, the Lawrence Livermore National Laboratory (LLNL) Blue Gene/Q Sequoia, using 96 racks (1,572,864 processor cores, 1.5 PB of memory, 98,304 MPI processes, and 6,291,456 threads). IBM and the Lawrence Berkeley National Laboratory (LBNL) achieved an unprecedented scale of 2.084 billion neurosynaptic cores containing 53×10¹⁰ (530 billion) neurons and 1.37×10¹⁴ (about 137 trillion) synapses, running only 1,542 times slower than real time.
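
The exponents above are easy to misread, so a quick Python check of the quoted figures may help. It uses only the numbers in the paragraph; the per-core division is an added illustration, and comparing the result with 256 neurons and 256 × 256 synapses per core assumes the TrueNorth core size IBM has described elsewhere.

```python
# Figures quoted for the TrueNorth simulation on Sequoia.
cores = 2.084e9
neurons = 53e10          # 53 x 10^10
synapses = 1.37e14       # 1.37 x 10^14
slowdown = 1542          # times slower than real time

neurons_per_core = neurons / cores
synapses_per_core = synapses / cores
# Wall-clock minutes needed to simulate one second of network activity.
minutes_per_sim_second = slowdown / 60

print(f"neurons:  {neurons / 1e9:.0f} billion")
print(f"synapses: {synapses / 1e12:.0f} trillion")
print(f"per core: {neurons_per_core:.0f} neurons, {synapses_per_core:.0f} synapses")
print(f"1 simulated second takes about {minutes_per_sim_second:.1f} minutes")
```

The result, about 254 neurons and 65,739 synapses per core, sits close to 256 and 256 × 256 = 65,536, and each simulated second costs roughly 26 minutes of wall-clock time.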

This network of over 2 billion neurosynaptic cores is divided into 77 brain-inspired regions with probabilistic intra-region connectivity, and it doubles the "brain power" of "Blue Matter". This parallel style of processing could give rise to intelligent security systems, or to cars that assist the driver with navigation and lighting and might one day help drive. Cognitive computing is still in its infancy: considering that an average human brain contains an estimated 86 billion neurons, an artificial brain would need about 86 large rooms filled with "Blue Matter" supercomputers to be a human equivalent. Perhaps this new approach to cognitive computing will speed up the singularity, or the merging of man and machine, by reverse engineering the thought processes of a brain. The task now is to shrink each billion silicon neurons and their connections to a size no bigger than a small melon, with the energy consumption of a light bulb. With this in mind, intelligent robots are a real possibility in the future. If they arrive, we will also need something like Asimov's First Law, which forbids a robot to harm a human, and probably a rethink of what a soul is...
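
The 86-rooms estimate is a single division, sketched here in Python with figures already quoted in this article (86 billion neurons per human brain, 1 billion per "Blue Matter" run), assuming as the text does one room of hardware per 1-billion-neuron simulation. The same numbers also show that the earlier "4.5% of a human brain" figure implies a total of about 22 billion neurons, which falls inside the 15 to 33 billion cerebral-cortex estimate rather than matching the whole-brain figure.

```python
human_brain_neurons = 86e9     # whole-brain estimate used above
blue_matter_neurons = 1e9      # neurons in the 2009 "cat cortex" simulation
blue_matter_share = 0.045      # "4.5% of a human brain"

# One room of Blue Matter hardware per billion neurons (the text's assumption).
rooms = human_brain_neurons / blue_matter_neurons
# Total neuron count implied by the 4.5% claim.
implied_total = blue_matter_neurons / blue_matter_share

print(f"rooms needed: {rooms:.0f}")
print(f"4.5% claim implies {implied_total / 1e9:.1f} billion neurons")
```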